Streamlining Apache Spark: Mastering CPU and GPU Performance with ZettaProf

"Why do we need something new when we already have Spark UI?" is a question that's lingered in the minds of Apache Spark enthusiasts for some time. While Spark UI serves as a valuable tool for monitoring resources, it often leaves us craving a deeper understanding of our data processing landscape.

Questions abound:

- What's the bottleneck in our executed query?

- Which jobs or stages are consuming the lion's share of our time, and which operators are the primary suspects?

- Are there lurking stability or performance issues that require immediate attention?

- Can the Spark UI offer intelligent recommendations, or help dissect query failures?

- When it comes to joins, which algorithm should we choose: Sort-Merge Join or Broadcast Hash Join?

- How do we arrive at the optimal configuration of resources and query execution parameters?

The Spark UI, despite its merits, occasionally falls short in furnishing these vital insights and solutions. Hence, there's a growing need for a fresh approach – a Spark profiling and optimization tool that not only bridges these gaps but also extends its capabilities to unlock GPU acceleration.

In this blog, we introduce you to ZettaProf, an innovative tool that demystifies Spark profiling and helps shed light on your application's CPU dependency in the context of data processing. Moreover, we'll delve into the transformative potential of external accelerators, such as the RAPIDS Accelerator for Apache Spark, which have emerged as a game-changer for performance enhancement in data processing workflows.

Evaluating CPU and GPU potential: Can your application benefit?

Optimizing Spark applications is a journey often marked by iterative improvements that lead to enhanced performance. Workflow optimization tools like ZettaProf can help you evaluate both CPU and GPU potential by providing insights on memory usage, data skew, time skew, spills and errors.

Following is a breakdown of the typical process used to tune Spark processing performance.

1. Analyze your application

The journey begins with a meticulous analysis of your Spark application. ZettaProf examines every aspect, from CPU and memory use to potential spills and skewness.

The goal is to identify areas for improvement. If there are opportunities to optimize CPU and memory usage or eliminate major spills and skew issues, integrated tools like ZettaProf provide you with insights and recommendations. It's your green light to fine-tune your data processing application.

2. User-driven optimization

With new insights on how your application is running, you can take specific actions to optimize your Spark workflow for efficiency. These actions could involve:

- Implementing ZettaProf Recommendations: Act on ZettaProf's guidance to address bottlenecks, optimize resource usage, and improve query performance. This might include adjusting query execution plans, optimizing cache usage, or enhancing data shuffling strategies.

- Fine-Tuning Application Code: Based on ZettaProf's analysis, you can refine your application code to reduce unnecessary computations and improve overall efficiency.

3. NVIDIA Accelerated Spark Qualification Tool

With your application refined, it's time to explore the world of GPU acceleration. ZettaProf seamlessly integrates with the NVIDIA Accelerated Spark Qualification Tool, which is designed to evaluate the potential benefits of GPU acceleration for your application.

4. Analyzing GPU potential

The NVIDIA Accelerated Spark Qualification Tool takes a close look at your now-optimized application. It assesses whether GPU acceleration can bring significant performance improvements. If the tool detects potential benefits, it's time to consider the shift to GPU processing.

5. User update

ZettaProf keeps you in the loop throughout this journey. If the NVIDIA Accelerated Spark Qualification Tool identifies opportunities for GPU acceleration, you'll be promptly informed. At that point you can make an informed decision about transitioning to GPU processing.

This iterative approach ensures that your Spark application undergoes a thorough optimization journey. ZettaProf guides you from the initial analysis, through user-driven improvements, and finally, to assessing GPU potential. It's a path that maximizes your application's performance while giving you the autonomy to make informed choices.

In the next sections, we'll dive deeper into how ZettaProf simplifies configuration and fine-tuning to achieve the best results.

Technical Walkthrough

In the previous sections, we've taken you on a journey through the optimization of Spark applications, leveraging the power of both CPU and GPU resources with the help of ZettaProf. Now, let's bring it all together into a comprehensive step-by-step guide with execution details.

Evaluation setup: Workload and machine details

Before we dive into the steps, it's essential to understand the foundation of our journey. We employed a robust evaluation setup, including:

- Workload: We used TPC-DS benchmark queries 1 through 25. This diverse workload helped us uncover optimization opportunities across various scenarios.

- Machine Details: Our infrastructure consists of a machine with 128 cores, 512 GiB of RAM, and four NVIDIA A100 GPUs. This powerful setup formed the canvas on which we painted our optimization story.

Step 1: Analyze Spark application with ZettaProf

Our journey began with a meticulous analysis of our Spark application using ZettaProf. This analysis encompassed every aspect, from CPU and memory utilization to potential spills and skewness. The primary goal was to identify areas ripe for improvement. Whenever opportunities arose to enhance CPU and memory utilization or mitigate spills and skew issues, ZettaProf provided us with precise insights and recommendations.
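This post doesn't describe how ZettaProf detects skew. For comparison, the sketch below applies the rule that Spark's Adaptive Query Execution uses to flag a skewed join partition. A partition counts as skewed if it is larger than `spark.sql.adaptive.skewJoin.skewedPartitionFactor` times the median partition size and also larger than `spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes`. The defaults are 5 and 256 MB in Spark 3.x. The function name is hypothetical, and the input is assumed to be a list of partition sizes taken from stage metrics.

```python
from statistics import median
from typing import List

# Defaults of Spark AQE's skew-join settings (Spark 3.x):
#   spark.sql.adaptive.skewJoin.skewedPartitionFactor           = 5
#   spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes = 256MB
SKEWED_PARTITION_FACTOR = 5.0
SKEWED_PARTITION_THRESHOLD_BYTES = 256 * 1024 * 1024


def find_skewed_partitions(
    sizes_bytes: List[int],
    factor: float = SKEWED_PARTITION_FACTOR,
    threshold_bytes: int = SKEWED_PARTITION_THRESHOLD_BYTES,
) -> List[int]:
    """Return indices of partitions that are larger than `factor` x the median
    partition size AND larger than `threshold_bytes` (hypothetical helper)."""
    if not sizes_bytes:
        return []
    med = median(sizes_bytes)
    return [
        i
        for i, size in enumerate(sizes_bytes)
        if size > factor * med and size > threshold_bytes
    ]


MB = 1024 * 1024
# Seven 100 MB partitions and one 2 GB partition: only the last one is flagged.
print(find_skewed_partitions([100 * MB] * 7 + [2048 * MB]))  # [7]
```

You could run the same ratio-to-median check on task durations to spot time skew. For durations, though, the right factor is a judgment call.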

Experiment 1: Using the default Spark configuration

For the initial configuration, our Spark application ran with 1 driver (20 GB of RAM, 5 cores) and 1 executor (480 GB of RAM, 120 cores). With this default Spark configuration, the application took 44 minutes to complete.

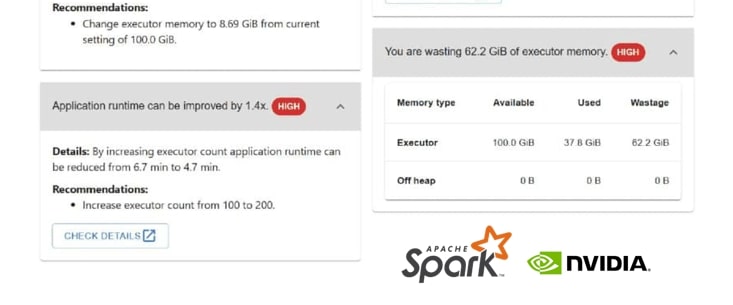

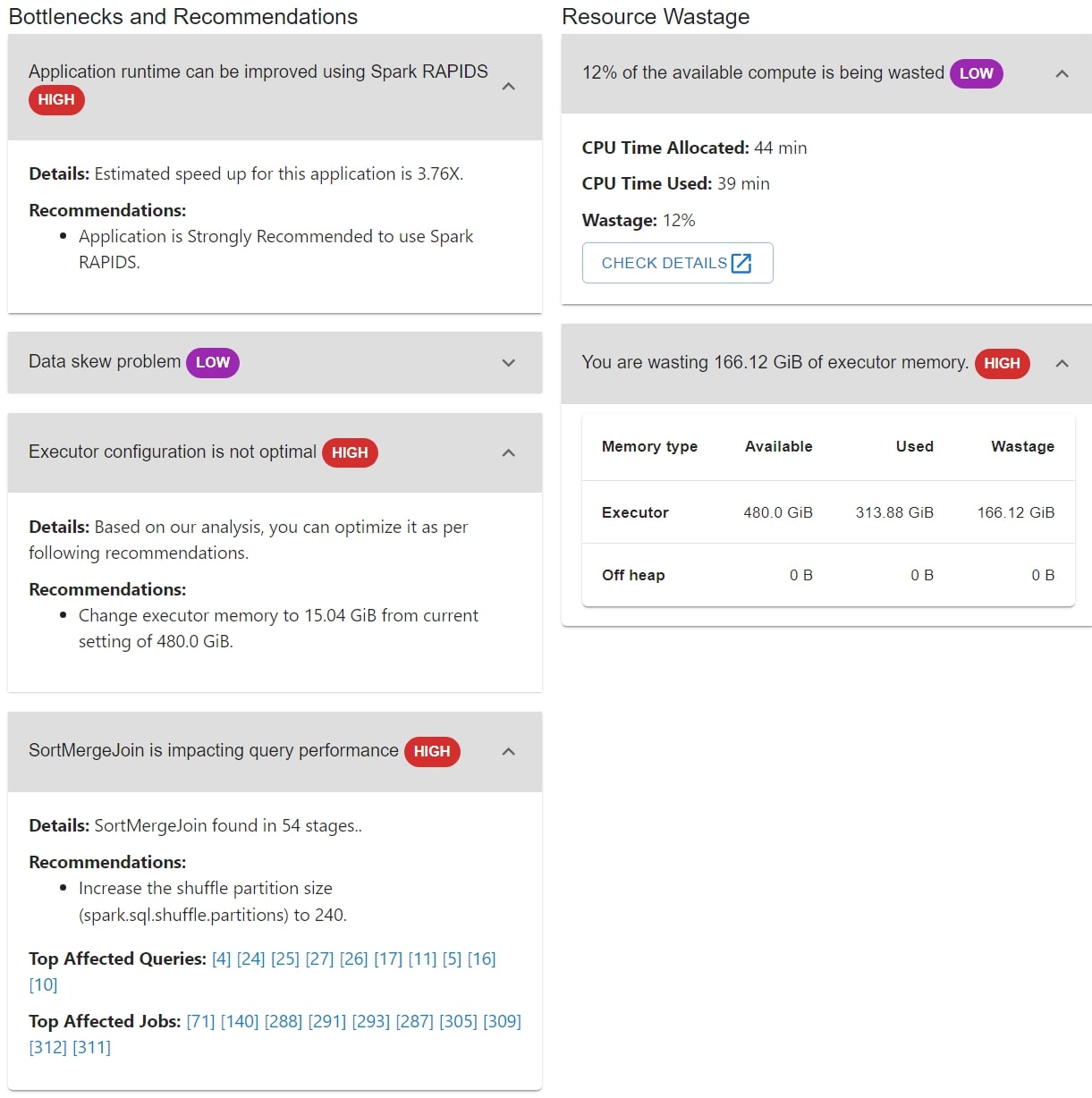

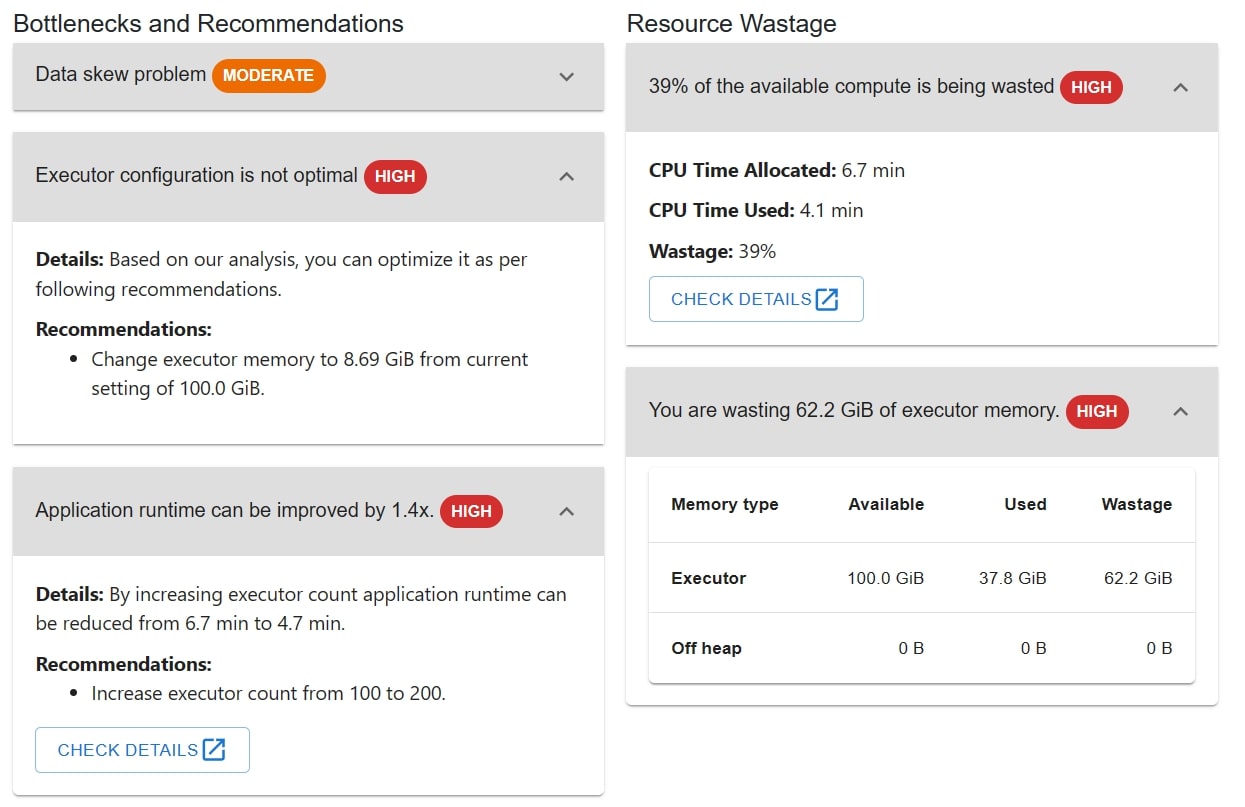

ZettaProf identified several bottlenecks, including data skew and suboptimal executor configurations. Its recommendations included using the RAPIDS Accelerator, addressing data skew with salting, and optimizing executor memory (Figure 1).
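Salting is a general technique, not a ZettaProf or RAPIDS feature. You split a hot join key into several sub-keys on the large side and replicate the matching rows on the small side. The hot key's rows then spread over several tasks instead of landing on one. The plain-Python sketch below shows the idea without Spark. The helper names and the salt count of 4 are hypothetical. A deterministic round-robin salt replaces the random salt often used in practice, so the result is reproducible.

```python
from collections import Counter, defaultdict

NUM_SALTS = 4  # hypothetical; more salts spread a hot key over more tasks


def salt_left(rows, num_salts=NUM_SALTS):
    """Turn each (key, value) on the large side into ((key, salt), value)."""
    return [((key, i % num_salts), value) for i, (key, value) in enumerate(rows)]


def explode_right(rows, num_salts=NUM_SALTS):
    """Replicate each small-side row once per salt so every salted key has a match."""
    return [((key, s), value) for key, value in rows for s in range(num_salts)]


def hash_join(left, right):
    """Inner equi-join of two lists of (key, value) pairs."""
    index = defaultdict(list)
    for key, value in right:
        index[key].append(value)
    return [(key, lv, rv) for key, lv in left for rv in index.get(key, [])]


def largest_group(rows):
    """Size of the biggest group of rows sharing one join key."""
    return Counter(key for key, _ in rows).most_common(1)[0][1]


left = [("hot", i) for i in range(1000)] + [("cold", 0)]
right = [("hot", "H"), ("cold", "C")]

plain = hash_join(left, right)
salted = hash_join(salt_left(left), explode_right(right))
unsalted = [(key[0], lv, rv) for key, lv, rv in salted]  # strip the salt again

print(sorted(plain) == sorted(unsalted))                    # True
print(largest_group(left), largest_group(salt_left(left)))  # 1000 250
```

In Spark you would typically add a salt column to the large side and explode the small side over the salt range before joining. On Spark 3.x, AQE's skew-join handling can also split skewed sort-merge-join partitions automatically.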

Recommendations

Based on ZettaProf's insights, Figure 2 highlights the recommended settings for optimizing our Spark environment. These optimizations include:

- spark.executor.cores: Adjust from the current 120 to 5.

- spark.executor.instances: Increase from 1 to 24.

- spark.executor.memory: Reduce from 480.0 GiB to 15.04 GiB.

- spark.sql.shuffle.partitions: Increase from 200 to 240.

- spark.driver.memory: Reduce from 20.0 GiB to 15.04 GiB.

- spark.serializer: Switch from "org.apache.spark.serializer.JavaSerializer" to "org.apache.spark.serializer.KryoSerializer".
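The sketch below expresses the recommendations above as `spark-submit --conf` arguments in Python. The helper names are hypothetical. One practical wrinkle: Spark's byte-size parser rejects fractional values such as `15.04g`, so the sketch converts 15.04 GiB to whole MiB. It also checks the arithmetic behind the numbers. Twenty-four executors with 5 cores each keep the original 120 cores, and 240 shuffle partitions work out to 2 per core.

```python
def to_spark_mem(gib: float) -> str:
    """Express a GiB size as whole MiB, e.g. 15.04 -> '15401m'.

    Spark's size parser rejects fractional values such as '15.04g'.
    """
    return f"{round(gib * 1024)}m"


RECOMMENDED = {
    "spark.executor.cores": "5",
    "spark.executor.instances": "24",
    "spark.executor.memory": to_spark_mem(15.04),
    "spark.sql.shuffle.partitions": "240",
    "spark.driver.memory": to_spark_mem(15.04),
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
}


def submit_args(conf: dict) -> list:
    """Render a config dict as spark-submit '--conf key=value' arguments."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args


total_cores = int(RECOMMENDED["spark.executor.cores"]) * int(
    RECOMMENDED["spark.executor.instances"]
)
print(total_cores)  # 120: same core count as the original single executor
print(int(RECOMMENDED["spark.sql.shuffle.partitions"]) // total_cores)  # 2 per core
print(" ".join(submit_args(RECOMMENDED)))
```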

Step 2: Act on ZettaProf recommendations to optimize CPU

Next, our primary objective was to tune our CPU usage to its maximum potential.

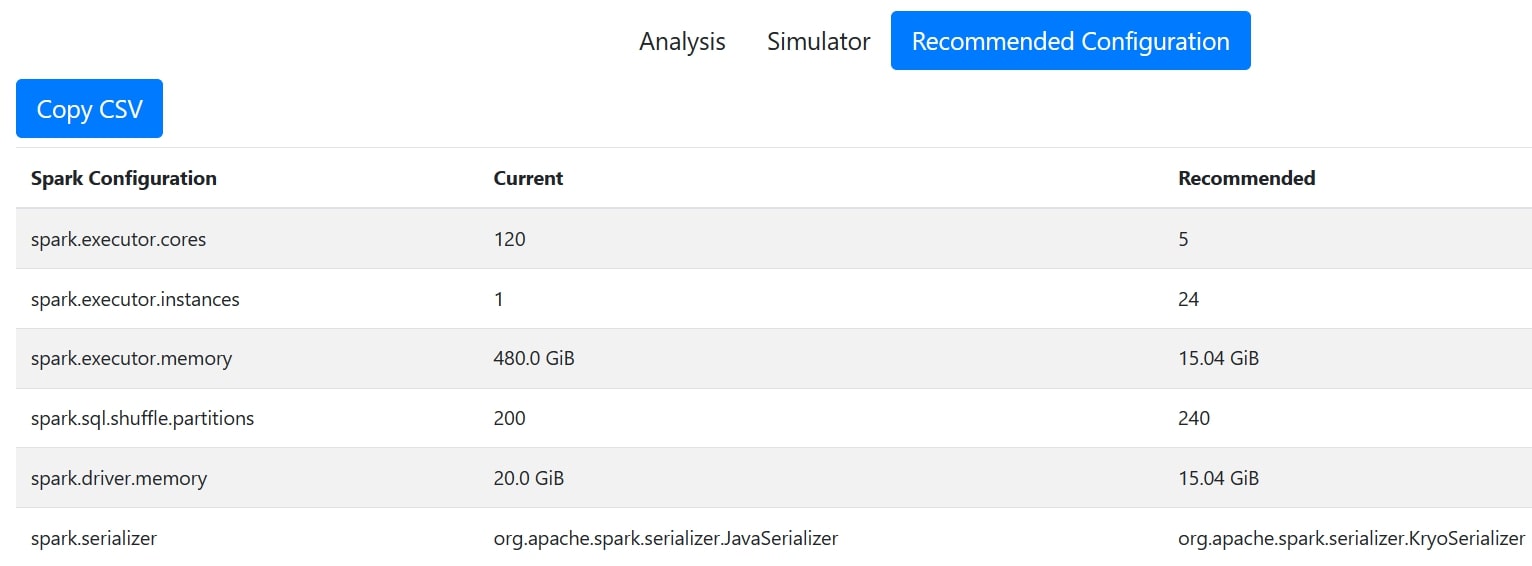

Experiment 2: CPU-optimized and GPU potential

With guidance from ZettaProf, we transitioned to a new configuration: 1 driver with 15.04 GB of RAM and 5 cores, and 24 executors, each with 15.04 GB of RAM and 5 cores (Figure 3).

With the optimized settings, our Spark application's runtime dropped significantly. The workload completed in 28 minutes instead of the initial 44 minutes, an improvement of more than 1.5X. Once you have optimized CPU and memory usage and addressed data skew, the next opportunity for further speedup is GPU acceleration.

Step 3: Explore the world of the RAPIDS Accelerator for Apache Spark

With CPU resources optimized to their maximum potential, we can now explore GPU acceleration.

Experiment 3: Leveraging GPU resources

In this setup, we employed a third configuration: 1 driver with 20 GB of RAM and 5 cores, plus 4 executor instances, each with 8.69 GB of RAM and 25 cores.
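The post doesn't list the GPU run's Spark properties beyond these sizes. Below is a minimal sketch of how such a configuration could look with the RAPIDS Accelerator. It assumes one A100 per executor (4 executors on 4 GPUs) and a per-task GPU share of 1 divided by executor cores. `spark.plugins=com.nvidia.spark.SQLPlugin`, `spark.rapids.sql.enabled`, and the `spark.*.resource.gpu.amount` properties are standard RAPIDS and Spark settings. Cluster-manager specifics, such as a GPU discovery script and the plugin jar location, are omitted. These values are illustrative, not the authors' actual settings.

```python
def to_spark_mem(gib: float) -> str:
    """Express a GiB size as whole MiB; Spark rejects values such as '8.69g'."""
    return f"{round(gib * 1024)}m"


EXECUTOR_CORES = 25
GPUS_PER_EXECUTOR = 1  # assumption: one A100 per executor, 4 executors on 4 GPUs

GPU_CONF = {
    # Sizes from Experiment 3.
    "spark.driver.memory": to_spark_mem(20.0),
    "spark.driver.cores": "5",  # only honored in cluster deploy mode
    "spark.executor.instances": "4",
    "spark.executor.cores": str(EXECUTOR_CORES),
    "spark.executor.memory": to_spark_mem(8.69),
    # Enable the RAPIDS Accelerator (its jar must be on the classpath).
    "spark.plugins": "com.nvidia.spark.SQLPlugin",
    "spark.rapids.sql.enabled": "true",
    # GPU scheduling: each executor owns one GPU, shared by its 25 task slots.
    "spark.executor.resource.gpu.amount": str(GPUS_PER_EXECUTOR),
    "spark.task.resource.gpu.amount": str(GPUS_PER_EXECUTOR / EXECUTOR_CORES),
}

for key, value in GPU_CONF.items():
    print(f"{key}={value}")
```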

By harnessing the power of GPU resources, our application's runtime was further reduced to 6.7 minutes (Figure 4).

ZettaDiff: Your Optimization Companion

By providing a comprehensive comparative analysis of your configurations, ZettaDiff empowers you to make data-driven decisions. Whether you're fine-tuning CPU resources or transitioning to GPU utilization, ZettaDiff's insights are invaluable. It's the tool that ensures you're not just optimizing but optimizing intelligently. Its comparison is organized into three sections.

Summary Section: This section provides a high-level overview of the performance comparison between different Spark application configurations or executions. It offers a quick glance at key metrics, such as runtime, CPU usage, memory utilization, and more, allowing users to assess which configuration is performing better.

Operation Section: In this section, users can dive deeper into the analysis of individual operators within their Spark applications. It highlights how each operator contributes to the overall performance, helping users pinpoint bottlenecks and areas for improvement. This granular view assists in fine-tuning specific aspects of the application.

Configuration Section: The Configuration section focuses on the technical details of each configuration being compared. Users can access information about Spark settings, memory allocation, CPU cores, and other configuration parameters. This insight aids in understanding how different settings impact the application's performance and resource utilization.
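This post doesn't describe ZettaDiff's internals. At its core, a configuration comparison is a key-by-key diff of two runs' Spark properties. The minimal sketch below uses the before/after values from the ZettaProf recommendations listed earlier. The function name is hypothetical.

```python
from typing import Dict, Optional, Tuple

# Before/after values from the ZettaProf recommendations listed earlier.
DEFAULT_CPU = {
    "spark.executor.cores": "120",
    "spark.executor.instances": "1",
    "spark.executor.memory": "480.0 GiB",
    "spark.sql.shuffle.partitions": "200",
    "spark.driver.memory": "20.0 GiB",
    "spark.serializer": "org.apache.spark.serializer.JavaSerializer",
}
OPT_CPU = {
    "spark.executor.cores": "5",
    "spark.executor.instances": "24",
    "spark.executor.memory": "15.04 GiB",
    "spark.sql.shuffle.partitions": "240",
    "spark.driver.memory": "15.04 GiB",
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
}


def diff_configs(
    before: Dict[str, str], after: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Map each key whose value differs to (before, after); None means unset."""
    keys = sorted(set(before) | set(after))
    return {k: (before.get(k), after.get(k)) for k in keys if before.get(k) != after.get(k)}


for key, (old, new) in diff_configs(DEFAULT_CPU, OPT_CPU).items():
    print(f"{key}: {old} -> {new}")
```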

Comparing runtime and bound types

One of the fundamental aspects of optimizing Spark applications is runtime reduction. With ZettaDiff, we can easily compare the runtime of different configurations. In our journey, we started with an unoptimized CPU configuration that took 44.34 minutes to complete (TPCDS_DEFAULT_CPU_1_25). After fine-tuning based on ZettaProf recommendations, we achieved a significant improvement, completing the workload in just 28.35 minutes (TPCDS_OPT_CPU_1_25). Finally, by harnessing the power of GPUs, our runtime dropped to just 6.7 minutes (TPCDS_W_1_25). This progression showcases the tangible benefits of optimization.
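The speedups behind these numbers follow directly from the measured runtimes. The small Python check below uses the run labels above as keys. The `speedup` helper is ours, not part of ZettaDiff.

```python
RUNTIMES_MIN = {
    "TPCDS_DEFAULT_CPU_1_25": 44.34,  # default CPU configuration
    "TPCDS_OPT_CPU_1_25": 28.35,      # CPU configuration tuned with ZettaProf
    "TPCDS_W_1_25": 6.7,              # GPU run
}


def speedup(baseline: str, candidate: str) -> float:
    """How many times faster `candidate` ran than `baseline`."""
    return RUNTIMES_MIN[baseline] / RUNTIMES_MIN[candidate]


for run in RUNTIMES_MIN:
    print(f"{run}: {speedup('TPCDS_DEFAULT_CPU_1_25', run):.2f}x")
# TPCDS_DEFAULT_CPU_1_25: 1.00x
# TPCDS_OPT_CPU_1_25: 1.56x
# TPCDS_W_1_25: 6.62x
print(f"{speedup('TPCDS_OPT_CPU_1_25', 'TPCDS_W_1_25'):.2f}x")  # 4.23x
```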

Operator durations and configurations

Analyzing operator durations is vital for pinpointing bottlenecks in your Spark application. ZettaDiff allows us to compare the time spent on various operators, enabling us to focus our optimization efforts where they matter most. Our journey showed remarkable reductions in operator durations as we optimized our configurations.
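The post doesn't publish per-operator timings, so no real numbers appear here. The hypothetical helper below illustrates this kind of comparison by ranking operators by time saved between two runs. The input format, a map from operator name to total seconds, is an assumption.

```python
from typing import Dict, List, Tuple


def operator_deltas(
    before: Dict[str, float], after: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Per-operator time saved (before - after), largest savings first.

    Operators present in only one run count as 0 s in the other, so a
    negative delta means the operator got slower (or newly appeared).
    Ties are broken by operator name to keep the output deterministic.
    """
    ops = set(before) | set(after)
    deltas = {op: before.get(op, 0.0) - after.get(op, 0.0) for op in ops}
    return sorted(deltas.items(), key=lambda kv: (-kv[1], kv[0]))
```

Ranking by time saved, rather than by raw duration, surfaces the operators where a configuration change mattered most.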

The configurations reveal the evolution of our setup. From default Spark settings, we moved to more efficient configurations based on ZettaProf's advice. Finally, we adopted GPUs, which significantly changed our configuration to take advantage of this powerful resource. ZettaDiff plays a pivotal role in understanding these configuration changes and their impact.

Summary

Our evaluation used TPC-DS queries 1 through 25. ZettaProf's analysis identified bottlenecks, and acting on its recommendations optimized CPU usage, reducing the runtime from 44 minutes to 28 minutes. Running the workload on GPUs then reduced it to 6.7 minutes.

ZettaDiff helps visualize configuration changes across runs and the balance between CPU and GPU resources, supporting informed, data-driven, and iterative performance improvements.

Getting Started with ZettaProf

Data engineers working with Spark often face challenges in optimizing performance. These include identifying bottlenecks, managing resources effectively, addressing data and time skew issues, reducing spills and errors, and selecting the right join algorithms and configurations. Traditional tools lack intelligent optimization recommendations. ZettaProf provides holistic insights, simplifying optimization by pinpointing issues, offering resource guidance, and delivering intelligent suggestions. This streamlines the process and empowers engineers to maximize Spark applications' potential.